Introduction

In the realm of big data, Apache Spark and Apache Kafka are two highly influential open-source projects. Both are designed to handle large volumes of data and are critical components in many modern data architectures. However, they address fundamentally different challenges. This blog post provides an in-depth comparison of their core design principles, architectural distinctions, and optimal use cases, aiming to clarify when to choose one, the other, or both.

Apache Spark: A Quick Overview

Apache Spark is best understood as a unified analytics engine designed for large-scale data processing [1]. Its primary purpose is to perform fast and general-purpose cluster computing. Architecturally, a Spark application runs as a set of independent processes on a cluster, coordinated by a driver program that connects to a cluster manager. The driver and executors (processes on worker nodes) run user code. Spark's power comes from its ability to perform in-memory computations using Resilient Distributed Datasets (RDDs) or structured DataFrames/Datasets, which significantly speeds up iterative tasks [2, 3, 4]. Key components like Spark SQL for querying, Structured Streaming for stream processing, MLlib for machine learning, and GraphX for graph analytics extend its capabilities into various data processing domains [4, 5, 6, 7].

![Spark Cluster Overview [20]](https://www.automq.com/cdn-cgi/image/format=avif,quality=90/https://cdn.prod.website-files.com/6809c9c3aaa66b13a5498262/685e6032454fab1b0a3ce262_67480fef30f9df5f84f31d36%252F685e5351b05359450e093d4b_ldOy.png)

Apache Kafka: A Quick Overview

Apache Kafka, at its core, is a distributed event streaming platform [10]. Its fundamental purpose is to enable the ingestion, storage, and processing of continuous streams of records or events in a fault-tolerant and scalable manner. Architecturally, Kafka operates as a cluster of one or more brokers (servers). Data is organized into topics, which are partitioned and replicated across these brokers, forming a distributed commit log [11]. Producers write data to topics, and consumers read from them [13]. Kafka's ecosystem includes Kafka Connect for data integration and Kafka Streams, a client library for building stream processing applications directly on Kafka event streams [15, 9].
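To make the topic/partition/offset vocabulary concrete, here is a minimal toy model in plain Python. It is not the Kafka client API: `ToyTopic`, `produce`, and `consume` are hypothetical names, and `zlib.crc32` merely stands in for whatever hash a real partitioner uses. It only illustrates that records with the same key land in the same partition and that reading does not remove data.

```python
import zlib


class ToyTopic:
    """Toy model of a partitioned, append-only topic (not the Kafka client API)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key, value):
        # Records with the same key always map to the same partition,
        # which preserves per-key ordering. crc32 is an illustrative hash.
        p = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[p].append((key, value))
        offset = len(self.partitions[p]) - 1
        return p, offset

    def consume(self, partition, offset):
        # Reading does not delete records; a consumer just tracks its offset.
        return self.partitions[partition][offset:]


topic = ToyTopic(num_partitions=3)
p1, o1 = topic.produce("user-1", "login")
p2, o2 = topic.produce("user-1", "click")
records = topic.consume(p1, 0)
```

Both records for `user-1` end up in one partition at consecutive offsets, so a consumer reading that partition sees them in the order they were produced.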

![Apache Kafka Architecture [19]](https://www.automq.com/cdn-cgi/image/format=avif,quality=90/https://cdn.prod.website-files.com/6809c9c3aaa66b13a5498262/685ea502ba795363967ee9d2_67480fef30f9df5f84f31d36%252F685e62750df4a037e5bcd8c2_hRzi.png)

The Core Showdown: Spark vs. Kafka - An In-Depth Comparison

While the brief overviews highlight their general functions, a deeper comparison reveals crucial differences that dictate their suitability for various tasks.

Fundamental Design Goals

Apache Spark: Designed as a general-purpose distributed computation engine. Its goal is to execute complex analytical tasks over large datasets, whether they are at rest (batch processing) or in motion (stream processing). Spark's architecture is optimized for data transformations, aggregations, and advanced analytical operations like machine learning [1].

Apache Kafka: Designed as a high-throughput, distributed event streaming platform and durable message store. Its primary aim is to decouple data producers from data consumers, providing a reliable, scalable, and persistent buffer for event streams [10, 11]. Kafka excels at getting data from point A to point B reliably and enabling applications to react to streams of events.

These differing goals fundamentally shape their internal architectures and capabilities. Spark is about what you do with the data, while Kafka is about how you move and access streams of data.

Data Processing Paradigm

Apache Spark: Spark’s core processing model is batch-oriented. It processes data as RDDs or DataFrames, which represent entire datasets or large chunks of data [2, 4]. For stream processing, the legacy Spark Streaming (DStreams) API used a micro-batch approach, treating a stream as a sequence of small batches [5]. Its successor, Structured Streaming, treats a data stream as a continuously updated table; it runs in micro-batch mode by default and also offers an experimental continuous processing mode for lower latency [5]. In both cases the emphasis is on applying transformations to entire (micro-)batches or continuously evolving tables of data.

Apache Kafka: Kafka itself is not a processing engine in the way Spark is; it's a platform for event streams. However, its Kafka Streams library enables a true event-at-a-time processing paradigm [9]. Applications built with Kafka Streams process records one by one as they arrive, allowing for very low latency transformations, filtering, and stateful operations directly on the event flow. The focus is on continuous processing of individual events in motion.
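The difference between the two paradigms can be sketched in a few lines of plain Python. This is a toy illustration, not Spark or Kafka Streams code: the helper names are hypothetical, and batches are cut by record count here for simplicity, whereas a real micro-batch engine typically triggers on time intervals.

```python
from itertools import islice


def micro_batches(events, batch_size):
    """Group an event iterator into fixed-size batches (micro-batch style)."""
    it = iter(events)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def per_event(events, fn):
    """Apply fn to each event as soon as it arrives (event-at-a-time style)."""
    for event in events:
        yield fn(event)


events = [3, 1, 4, 1, 5, 9, 2]

# Micro-batch: one result per batch, available only once the batch is complete
batch_sums = [sum(b) for b in micro_batches(events, batch_size=3)]

# Event-at-a-time: one result per record, available immediately
doubled = list(per_event(events, lambda e: e * 2))
```

In the micro-batch version a record waits until its batch is full before any result is produced; in the per-event version each record yields output on its own, which is where the latency difference comes from.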

Data Storage and Persistence

Apache Spark: Spark is primarily a processing engine, not a long-term storage system. While it can cache RDDs or DataFrames in memory across executors for performance, this is for intermediate data during a computation's lifecycle [1]. For persistent storage of input or output datasets, Spark relies on external distributed storage systems like Hadoop Distributed File System (HDFS), object stores (e.g., S3), or NoSQL databases.

Apache Kafka: Kafka, in contrast, is a storage system for event streams [11]. It durably writes messages to a distributed commit log on disk within its brokers. Messages are retained for a configurable period (from minutes to indefinitely), allowing Kafka to act as a source of truth for event data. Consumers can replay messages from the past, and this storage capability is central to its design for decoupling and fault tolerance.
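The retention and replay behavior described above can be modeled with a small toy log. This is a simplified sketch under stated assumptions: `ToyLog` is a hypothetical class, timestamps are plain integers in seconds, and retention is applied per record, while a real broker manages retention at a coarser granularity.

```python
class ToyLog:
    """Toy append-only log with time-based retention (not Kafka's implementation)."""

    def __init__(self, retention_seconds):
        self.retention_seconds = retention_seconds
        self.records = []        # list of (offset, timestamp, value)
        self.next_offset = 0

    def append(self, timestamp, value):
        self.records.append((self.next_offset, timestamp, value))
        self.next_offset += 1

    def enforce_retention(self, now):
        # Drop records older than the retention window; offsets are never reused.
        cutoff = now - self.retention_seconds
        self.records = [r for r in self.records if r[1] >= cutoff]

    def read_from(self, offset):
        # Any consumer can re-read from any offset that is still retained.
        return [(o, v) for (o, _, v) in self.records if o >= offset]


log = ToyLog(retention_seconds=60)
log.append(0, "a")
log.append(30, "b")
log.append(90, "c")

first_read = log.read_from(0)
replay = log.read_from(0)          # reading again returns the same records
log.enforce_retention(now=100)     # records older than t=40 are dropped
after_retention = log.read_from(0)
```

Reading is non-destructive, so a second consumer (or the same one after a restart) can replay the full history until retention removes it.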

Stream Processing Capabilities: Spark Structured Streaming vs. Kafka Streams

This is a key area of comparison where their roles can sometimes seem to overlap, but their approaches differ significantly.

Spark Structured Streaming: Provides a high-level, declarative API built on the Spark SQL engine [5]. It allows users to define streaming computations in the same way they define batch computations on static data. Strengths include its unification of batch and stream processing, strong SQL integration, ability to handle complex event-time processing and windowing, and seamless integration with other Spark components like MLlib. It is well-suited for complex ETL, analytics on streams, and scenarios requiring sophisticated data transformations. Fault tolerance is achieved through checkpointing its progress and state to reliable distributed storage [5].

Kafka Streams: A client library that allows developers to build stream processing logic directly into their Java/Scala applications [9]. It is designed for simplicity and tight integration with the Kafka ecosystem. Strengths include lower latency for per-event processing, simpler deployment (as it's a library, not a separate cluster), and efficient state management using local state stores (e.g., RocksDB) backed by Kafka changelog topics for fault tolerance [9]. It's ideal for building real-time applications and microservices that react to Kafka events, perform enrichments, or maintain state based on event streams.

Architecturally, Spark Structured Streaming jobs run as Spark applications on a Spark cluster. Kafka Streams applications are standalone Java/Scala applications that consume from and produce to Kafka, leveraging Kafka itself for parallelism and fault tolerance of state.
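Event-time windowing, mentioned above as a strength of Structured Streaming, is easier to see with a tiny example. The following is a plain-Python sketch of a tumbling-window count, not Spark code; `tumbling_window_counts` is a hypothetical helper, and it ignores watermarks and state cleanup entirely.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) using each event's own timestamp."""
    counts = Counter()
    for event_time, key in events:
        # Assign the event to the window that contains its event time,
        # regardless of when it actually arrived.
        window_start = (event_time // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# (event_time_seconds, key); the event at t=5 arrives late and out of order
events = [(1, "a"), (12, "a"), (14, "b"), (5, "a")]
counts = tumbling_window_counts(events, window_seconds=10)
```

The late event at `t=5` is still counted in the `[0, 10)` window because windows are assigned by event time rather than arrival order.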

State Management in Streaming

Both streaming engines need to manage state for operations like aggregations or joins over time.

Spark Structured Streaming: Manages state for streaming queries in a state store on the executors, held in memory or on local disk (for example with the RocksDB state store provider), and reliably checkpoints it to distributed fault-tolerant storage (e.g., HDFS, S3) [5]. This ensures that state can be recovered if a Spark executor fails. The state is versioned and tied to the micro-batch or continuous processing model.

Kafka Streams: Manages state in local state stores within the application instances [9]. These stores can be in-memory or disk-based (commonly RocksDB). For fault tolerance, changes to these state stores are written to compacted Kafka topics (changelog topics). If an application instance fails, another instance can rebuild the state by replaying these changelog topics, so the state survives the loss of an instance [9]. This approach keeps state local to the processing instance for fast access while leveraging Kafka for durability.
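The changelog idea can be sketched with a toy key-value store. This is not the Kafka Streams API: `ToyStateStore` is a hypothetical class, and a plain Python list stands in for the changelog topic. The point is only that replaying the changelog in order rebuilds the latest value per key.

```python
class ToyStateStore:
    """Toy local key-value store that mirrors every write to a changelog list."""

    def __init__(self, changelog):
        self.local = {}
        self.changelog = changelog   # stands in for a changelog topic

    def put(self, key, value):
        self.local[key] = value
        self.changelog.append((key, value))

    @classmethod
    def restore(cls, changelog):
        # Replaying the changelog in order leaves the latest value per key.
        store = cls(changelog)
        for key, value in changelog:
            store.local[key] = value
        return store


changelog = []
store = ToyStateStore(changelog)
for word in ["a", "b", "a"]:
    store.put(word, store.local.get(word, 0) + 1)

# Simulate losing the instance and starting a replacement elsewhere
recovered = ToyStateStore.restore(changelog)
```

Because only the latest value per key matters for recovery, older entries for the same key can be discarded over time, which is what makes a compacted topic a natural fit.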

Fault Tolerance Mechanisms

Apache Spark: Achieves fault tolerance for its core computations through the lineage of RDDs. If a partition of an RDD is lost, Spark can recompute it from the original data source using the recorded sequence of transformations [2]. For Spark Streaming and Structured Streaming, fault tolerance is achieved by checkpointing metadata, data, and state to reliable storage, allowing recovery from failures [5].
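Lineage-based recovery can be illustrated with a toy dataset class that remembers its transformations. This is a sketch of the idea only, not Spark's RDD implementation; `ToyRDD` and its methods are hypothetical, and it assumes the source partitions themselves remain available.

```python
class ToyRDD:
    """Toy dataset that records how to rebuild each partition (not Spark's RDD)."""

    def __init__(self, source_partitions, transforms=()):
        self.source_partitions = source_partitions  # assumed stable input data
        self.transforms = tuple(transforms)         # the recorded lineage
        self.cache = {}

    def map(self, fn):
        # Transformations are lazy: only the lineage grows.
        return ToyRDD(self.source_partitions, self.transforms + (fn,))

    def compute(self, index):
        if index not in self.cache:
            data = self.source_partitions[index]
            for fn in self.transforms:
                data = [fn(x) for x in data]
            self.cache[index] = data
        return self.cache[index]


rdd = ToyRDD([[1, 2], [3, 4]]).map(lambda x: x + 1).map(lambda x: x * 10)
before = list(rdd.compute(1))
del rdd.cache[1]                # simulate losing a computed partition
after = rdd.compute(1)          # rebuilt from the source by replaying the lineage
```

Only the lost partition is recomputed; the other partition and the source data are untouched.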

Apache Kafka: Provides fault tolerance through data replication. Each topic partition can be replicated across multiple brokers. If the broker hosting a partition leader fails, one of the in-sync replicas on another broker is elected as the new leader, so data remains available for production and consumption [11, 12].
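Leader failover can be sketched as a simple selection rule. This is a simplified model, not the broker's actual election code: `elect_leader` is a hypothetical function, and it assumes that only replicas that are fully caught up (the "in-sync" set) are eligible to take over.

```python
def elect_leader(replicas, in_sync, failed):
    """Return the first in-sync replica that is still alive, or None."""
    for broker in replicas:
        if broker in in_sync and broker not in failed:
            return broker
    return None


replicas = [1, 2, 3]      # brokers holding a copy of the partition
in_sync = {1, 2}          # assumption: broker 3 is lagging behind

leader_before = elect_leader(replicas, in_sync, failed=set())
leader_after = elect_leader(replicas, in_sync, failed={1})
no_leader = elect_leader(replicas, in_sync, failed={1, 2})
```

Under this assumption the partition stays available as long as at least one in-sync replica survives; once they are all gone, the toy model prefers having no leader over promoting a replica that may be missing records.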

Scalability Models

Apache Spark: Scales its computational capacity by distributing data (RDDs/DataFrames) into partitions and executing tasks on these partitions in parallel across multiple executors on worker nodes [3]. Users can increase the number of executors or the resources per executor to scale processing power.
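The partition-parallel model can be mimicked with the Python standard library. This is a toy sketch, not Spark: threads stand in for executor cores, `split` and `run_job` are hypothetical helpers, and the "job" is just a sum of squares.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, num_partitions):
    """Split a list into num_partitions roughly equal slices."""
    return [data[i::num_partitions] for i in range(num_partitions)]


def sum_of_squares(partition):
    """The per-partition task: runs independently of other partitions."""
    return sum(x * x for x in partition)


def run_job(data, num_partitions, num_workers):
    partitions = split(data, num_partitions)
    # One task per partition; workers stand in for executor cores.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, partitions))
    # Combine the per-partition results into the final answer.
    return sum(partial_sums)


total = run_job(list(range(10)), num_partitions=4, num_workers=2)
```

The result does not depend on `num_workers`; adding workers only changes how many partition tasks run at the same time, which is the sense in which adding executors scales processing power.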

Apache Kafka: Scales by distributing topics across multiple brokers and further dividing topics into partitions [11, 12]. This allows multiple producers to write to different partitions in parallel and multiple consumers (within a consumer group) to read from different partitions in parallel, thus scaling throughput for both reads and writes. Adding more brokers to a Kafka cluster increases its capacity to handle more topics, partitions, and overall load.
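How partitions bound consumer parallelism can be shown with a toy assignment function. This is an illustrative round-robin scheme, not Kafka's actual assignor logic; `assign` and the consumer names are hypothetical.

```python
def assign(partitions, consumers):
    """Round-robin partitions over consumers; each partition gets one owner."""
    assignment = {c: [] for c in consumers}
    for i, p in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(p)
    return assignment


partitions = [0, 1, 2, 3]
two = assign(partitions, ["c1", "c2"])
five = assign(partitions, ["c1", "c2", "c3", "c4", "c5"])
idle = [c for c, owned in five.items() if not owned]
```

With four partitions, a fifth consumer in the same group has nothing to read, so the partition count sets the upper limit on read parallelism within a consumer group.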

Ecosystem and Use Cases Driven by Differences

These fundamental differences naturally lead them to excel in different areas:

Spark is the go-to for:

Complex batch ETL and data warehousing transformations.

Large-scale machine learning model training and batch scoring.

Interactive SQL queries over massive datasets.

Advanced stream analytics requiring complex logic, joins with historical data, or integration with ML models.

Kafka is the preferred choice for:

Building a reliable, scalable central nervous system for real-time event data in an organization.

Decoupling microservices and legacy systems through asynchronous messaging.

Real-time log aggregation and analysis pipelines.

Event sourcing architectures where all changes to application state are stored as a sequence of events.

Powering simpler, low-latency stream processing applications directly within event-driven services using Kafka Streams.

Summary Table: Key Comparative Points

| Feature | Apache Spark | Apache Kafka |
|---|---|---|
| Primary Design | Distributed Computation Engine | Distributed Event Streaming Platform & Message Store |
| Core Function | Data Processing & Analytics | Event Ingestion, Storage & Transport |
| Data Handling | Batch-oriented; processes datasets/micro-batches | Event-oriented; handles continuous streams of individual messages |
| Storage Role | Processing only; relies on external storage for persistence | Internal durable storage system for event streams [11] |
| Streaming Engine | Spark Structured Streaming (cluster-based) [5] | Kafka Streams (library for applications) [9] |
| State in Streaming | Checkpointed to distributed storage/local disk [5] | Local state stores backed by Kafka topics [9] |
| Fault Tolerance | RDD lineage, checkpointing [2, 5] | Data replication across brokers [11] |

Synergy: When Spark and Kafka Work Together

Despite their differences, Spark and Kafka are not mutually exclusive. In fact, they are often used together to create powerful, end-to-end data pipelines [17]. A common architecture involves:

Kafka ingesting high-velocity event streams from diverse sources.

Spark Structured Streaming consuming these streams from Kafka for complex transformations, enrichment (joining with historical data from data lakes), analytics, or machine learning.

The processed data or insights from Spark being written back to Kafka topics for consumption by other real-time applications or dashboards, or loaded into data warehouses or other systems.

This combination leverages Kafka's strengths in scalable and reliable data ingestion and transport with Spark's prowess in sophisticated data processing and analytics.

Conclusion

Choosing between Apache Spark and Apache Kafka, or deciding how to use them together, hinges on understanding their fundamental design differences. Spark is your engine for heavy-duty data computation and advanced analytics, while Kafka provides the robust, scalable backbone for your real-time event streams. It's rarely a question of "either/or" but rather "which tool is best suited for which part of the data lifecycle?" By recognizing their distinct architectural approaches to processing, storage, streaming, state management, and fault tolerance, engineers can design more effective, efficient, and resilient data architectures.

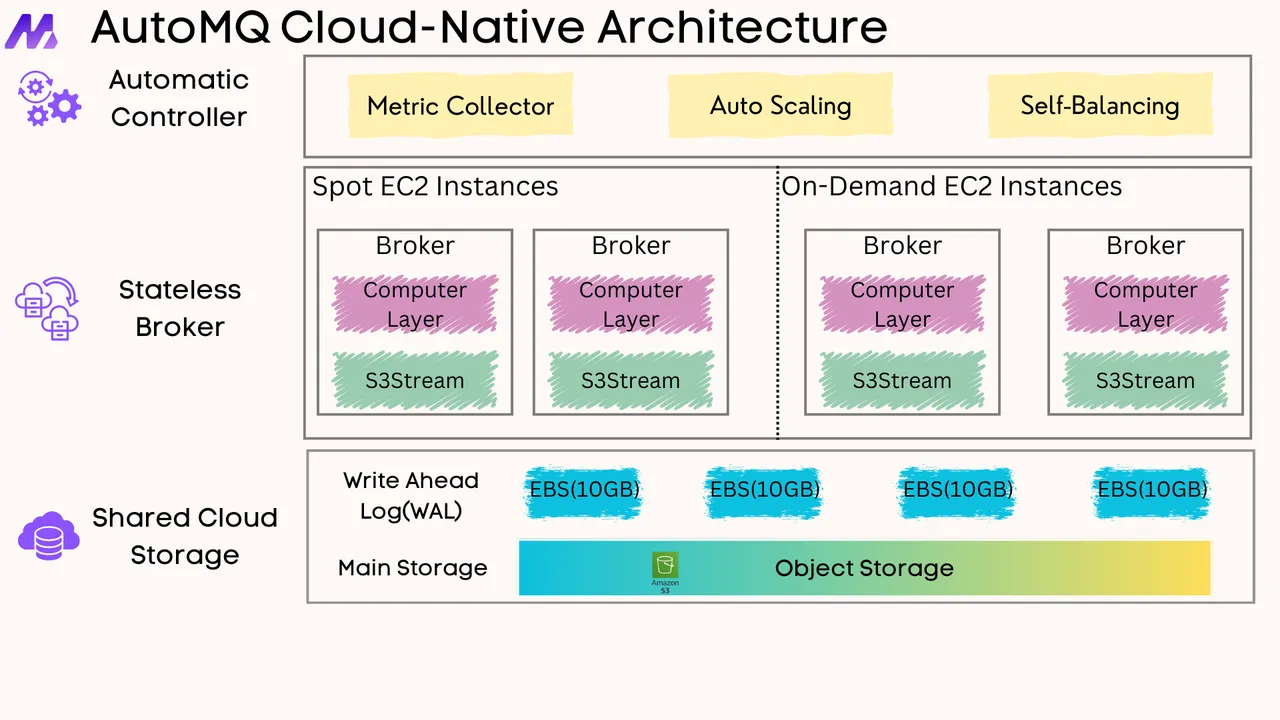

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging
