Overview

The Kafka command-line interface (CLI) is a collection of shell scripts that gives developers and administrators a set of tools to manage Apache Kafka resources from a terminal or from other scripts. As a direct, low-overhead way to interact with a Kafka cluster, the CLI covers creating and configuring topics, producing and consuming messages, managing consumer groups, and monitoring cluster health. This guide explores the Kafka CLI's capabilities, common use cases, configuration options, best practices, and troubleshooting approaches.

Understanding Kafka CLI Tools

Kafka CLI tools are shell scripts located in the bin/ directory of the Kafka distribution. These scripts cover interacting with Kafka clusters, managing topics, producing and consuming messages, and handling administrative tasks. The CLI is particularly valuable for quick testing, troubleshooting, and automation, because none of these require writing application code.

Essential Kafka CLI Commands

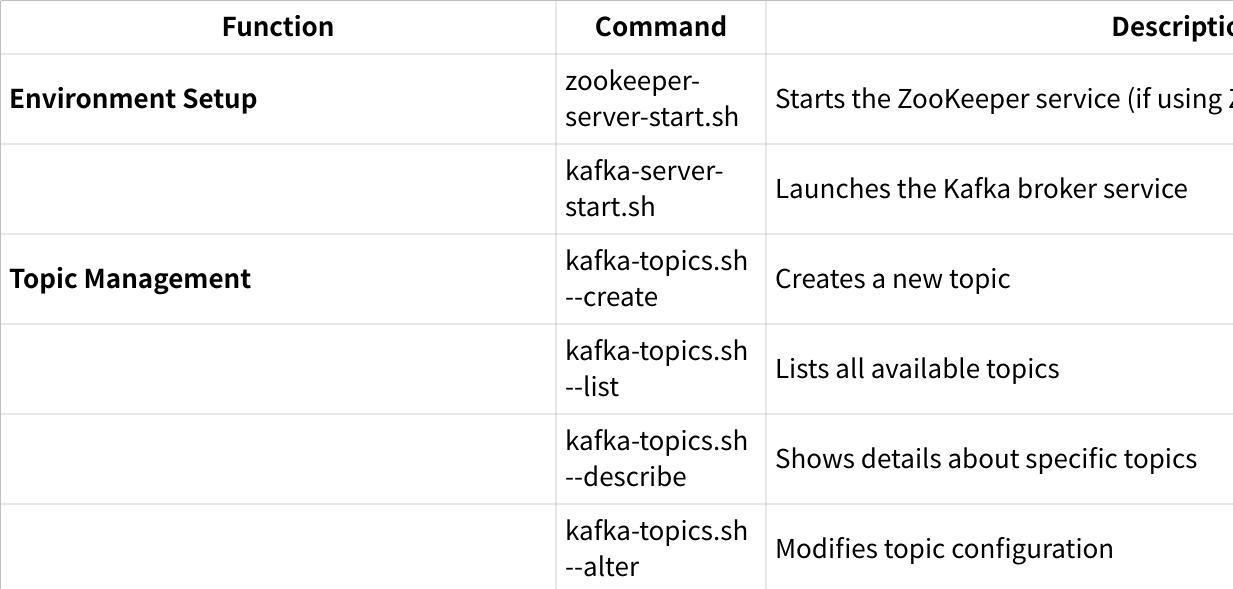

The following table presents the most commonly used Kafka CLI commands organized by function:

Let's examine each of these categories in more detail with their specific usage patterns.

Topic Management Commands

Topic management is one of the most common uses of the Kafka CLI. Here are detailed commands for managing Kafka topics:

## Create a topic with 3 partitions and replication factor of 1

bin/kafka-topics.sh --bootstrap-server localhost:9092 --create --topic my-topic --partitions 3 --replication-factor 1

## List all topics in the cluster

bin/kafka-topics.sh --bootstrap-server localhost:9092 --list

## Describe a specific topic

bin/kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic my-topic

## Add partitions to an existing topic

bin/kafka-topics.sh --bootstrap-server localhost:9092 --alter --topic my-topic --partitions 6

## Delete a topic (if delete.topic.enable=true)

bin/kafka-topics.sh --bootstrap-server localhost:9092 --delete --topic my-topic

These commands allow administrators to create, monitor, modify, and remove topics as needed[1][2].
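When topic creation is scripted, rerunning the script should not fail just because the topic already exists. The sketch below wraps the create command with the `--if-not-exists` option of `kafka-topics.sh`, which recent releases (including the 3.x line used in this guide) accept together with `--bootstrap-server`; check `--help` on older versions. The function name `ensure_topic` and the variables `KAFKA_TOPICS` and `BOOTSTRAP` are illustrative assumptions, not part of Kafka.

```shell
#!/bin/sh
# Hypothetical helper: create a topic only if it does not exist yet.
# KAFKA_TOPICS and BOOTSTRAP are assumed variables; override them as needed.
KAFKA_TOPICS="${KAFKA_TOPICS:-bin/kafka-topics.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

ensure_topic() {
  # $1 = topic name, $2 = partitions (default 3), $3 = replication factor (default 1)
  "$KAFKA_TOPICS" --bootstrap-server "$BOOTSTRAP" \
    --create --if-not-exists \
    --topic "$1" \
    --partitions "${2:-3}" \
    --replication-factor "${3:-1}"
}

# Example (requires a running broker):
# ensure_topic my-topic 3 1
```

Keeping the script path in a variable also makes it easy to swap in `echo` and inspect the exact command line before running it against a real cluster.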

Producer and Consumer Commands

The CLI provides tools for producing messages to topics and consuming messages from topics:

## Start a console producer to send messages to a topic

bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic my-topic

## Start a console consumer to read messages from a topic

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic my-topic

## Consume messages from the beginning of a topic

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic my-topic --from-beginning

## Consume messages as part of a consumer group

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic my-topic --group my-group

These commands enable interactive testing of message production and consumption, which is valuable for debugging and verification[3].
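By default the console producer sends each input line as a record without a key. The following sketch shows one way to test keyed records, using the `parse.key` and `key.separator` properties of the console producer and the `print.key` property of the console consumer. The function names and the `PRODUCER`, `CONSUMER`, and `BOOTSTRAP` variables are assumptions made for this example.

```shell
#!/bin/sh
# Sketch: send keyed records and read them back with keys shown.
# PRODUCER, CONSUMER and BOOTSTRAP are assumed variables for illustration.
PRODUCER="${PRODUCER:-bin/kafka-console-producer.sh}"
CONSUMER="${CONSUMER:-bin/kafka-console-consumer.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

produce_keyed() {
  # $1 = topic. Reads "key:value" lines from stdin; each line becomes one record.
  "$PRODUCER" --bootstrap-server "$BOOTSTRAP" --topic "$1" \
    --property parse.key=true --property key.separator=:
}

consume_keyed() {
  # $1 = topic, $2 = number of records to print (default 10) before exiting.
  "$CONSUMER" --bootstrap-server "$BOOTSTRAP" --topic "$1" \
    --from-beginning --max-messages "${2:-10}" \
    --property print.key=true --property key.separator=:
}

# Example (requires a running broker):
# printf 'user1:login\nuser2:logout\n' | produce_keyed my-topic
# consume_keyed my-topic 2
```

Using `--max-messages` makes the consumer exit on its own, which is convenient when the check runs inside a script rather than an interactive terminal.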

Consumer Group Management

Consumer groups can be managed and monitored using these commands:

## List all consumer groups

bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 --list

## Describe a consumer group (shows partitions, offsets, lag)

bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 --describe --group my-group

## Reset offsets for a consumer group (the group must be inactive; use --dry-run instead of --execute to preview the change)

bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 --reset-offsets --group my-group --topic my-topic --to-earliest --execute

## Delete a consumer group

bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 --delete --group my-group

These commands help in monitoring consumer progress, diagnosing performance issues, and managing consumer offsets[2].
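For monitoring, the per-partition output of `--describe` is often reduced to a single number. The sketch below sums the LAG column with `awk`. Because the column layout differs between Kafka versions, it looks up the column by its header name rather than by a fixed position. The function name `total_lag` is an assumption for this example.

```shell
#!/bin/sh
# Sketch: sum the LAG column of `kafka-consumer-groups.sh --describe` output.
# Reads the describe output on stdin and prints the total lag.
total_lag() {
  awk '
    # Until the header row is seen, look for the LAG column position.
    col == 0 { for (i = 1; i <= NF; i++) if ($i == "LAG") col = i; next }
    # Add numeric lag values; "-" (no committed offset) is skipped.
    $col ~ /^[0-9]+$/ { sum += $col }
    END { print sum + 0 }
  '
}

# Example (requires a running broker):
# bin/kafka-consumer-groups.sh --bootstrap-server localhost:9092 \
#   --describe --group my-group | total_lag
```

A scheduled job could compare the printed value against a threshold and raise an alert when the group falls behind.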

Common Use Cases for Kafka CLI

The Kafka CLI serves several important use cases that make it an essential tool for Kafka administrators and developers.

Testing and Verification

The CLI is ideal for quickly testing Kafka cluster functionality. For example, you can verify that messages can be successfully produced and consumed:

## Terminal 1: Start a consumer

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test-topic

## Terminal 2: Produce test messages

bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic test-topic

Data Backfilling

When you need to import historical data into Kafka, the console producer can read data from files:

## Import data from a file to a Kafka topic

cat data.json | bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic my-topic

This approach is useful for one-time data imports or testing with sample datasets[3].
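One detail matters when backfilling: the console producer treats every input line as a separate record, so a pretty-printed JSON file would be split into many fragments. The data file should therefore hold one record per line. A minimal sketch of a guarded import follows; the function name `backfill` and the `PRODUCER` and `BOOTSTRAP` variables are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: import a line-delimited file into a topic with basic checks.
# PRODUCER and BOOTSTRAP are assumed variables; override them as needed.
PRODUCER="${PRODUCER:-bin/kafka-console-producer.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

backfill() {
  # $1 = file with one record per line, $2 = target topic
  [ -s "$1" ] || { echo "error: $1 is missing or empty" >&2; return 1; }
  # Each input line becomes one record, so report the line count up front.
  echo "sending $(grep -c '' "$1") lines from $1 to $2" >&2
  "$PRODUCER" --bootstrap-server "$BOOTSTRAP" --topic "$2" < "$1"
}

# Example (requires a running broker):
# backfill data.json my-topic
```

Reporting the line count before sending gives a quick sanity check against the number of records you expect to see in the topic afterwards.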

Shell Scripting and Automation

The Kafka CLI can be incorporated into shell scripts to automate operations, such as monitoring logs or performing scheduled administrative tasks. For example:

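    #!/bin/bash
    # Log file to watch (assumed path; adjust for your environment)
    LOGFILE=security_events.log
    # Empty on start, so the file is sent once on the first pass
    checksum=""
    while true
    do
      sleep 60
      new_checksum=$(md5sum "$LOGFILE" | awk '{ print $1 }')
      if [ "$new_checksum" != "$checksum" ]; then
        # Produce the updated log to the security log topic
        kafka-console-producer.sh --topic full-security-log --bootstrap-server localhost:9092 < "$LOGFILE"
        # Remember the checksum so an unchanged file is not sent again
        checksum=$new_checksum
      fi
    done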

This makes it easy to incorporate Kafka operations into broader automation workflows[3].

Configuration and Setup

Installation and Basic Setup

To use Kafka CLI tools, you need to have Apache Kafka installed:

Download Kafka from the Apache Kafka website

Extract the downloaded file:

tar -xzf kafka_2.13-3.1.0.tgz

Navigate to the Kafka directory:

cd kafka_2.13-3.1.0

Set up environment variables (optional but recommended):

export KAFKA_HOME=/path/to/kafka

export PATH=$PATH:$KAFKA_HOME/bin

Starting the Kafka Environment

For a basic development environment, you need to start ZooKeeper (if using ZooKeeper mode) and then Kafka:

## Start ZooKeeper (if using ZooKeeper mode)

bin/zookeeper-server-start.sh config/zookeeper.properties

## Start Kafka

bin/kafka-server-start.sh config/server.properties
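Scripts that start Kafka and then immediately run CLI commands often fail because the broker is not accepting connections yet. The sketch below polls the broker with `kafka-broker-api-versions.sh` until it responds. It assumes the tool exits with a non-zero status when no broker can be reached; the function name `wait_for_broker` and the `API_VERSIONS` and `BOOTSTRAP` variables are assumptions for this example.

```shell
#!/bin/sh
# Sketch: wait until a broker answers before running further CLI commands.
# API_VERSIONS and BOOTSTRAP are assumed variables; override them as needed.
API_VERSIONS="${API_VERSIONS:-bin/kafka-broker-api-versions.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

wait_for_broker() {
  # $1 = maximum attempts (default 30), $2 = seconds between attempts (default 2)
  attempts="${1:-30}"
  delay="${2:-2}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$API_VERSIONS" --bootstrap-server "$BOOTSTRAP" >/dev/null 2>&1; then
      echo "broker at $BOOTSTRAP is ready (attempt $i)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "broker at $BOOTSTRAP not reachable after $attempts attempts" >&2
  return 1
}

# Example:
# wait_for_broker 30 2 && bin/kafka-topics.sh --bootstrap-server localhost:9092 --list
```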

Secure Connections

For secure Kafka clusters, additional configuration is needed. Common authentication methods include:

SASL Authentication

bin/kafka-topics.sh --bootstrap-server kafka:9092 --command-config client.properties --list

Where client.properties contains:

security.protocol=SASL_SSL

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="user" password="password";

SSL Configuration

bin/kafka-console-producer.sh --bootstrap-server kafka:9093 --producer.config client-ssl.properties --topic my-topic

These security configurations ensure that CLI tools can connect to secured Kafka clusters[4].
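The contents of client-ssl.properties are not shown above. As a minimal sketch, assuming the broker only requires clients to trust its certificate (no client certificate), the file needs the `security.protocol`, `ssl.truststore.location`, and `ssl.truststore.password` settings. The helper below writes such a file with restrictive permissions; the function name `write_ssl_config` and the example path and password are hypothetical.

```shell
#!/bin/sh
# Sketch: generate a client SSL properties file readable only by its owner.
write_ssl_config() {
  # $1 = output file, $2 = truststore path, $3 = truststore password
  (
    umask 077
    cat > "$1" <<EOF
security.protocol=SSL
ssl.truststore.location=$2
ssl.truststore.password=$3
EOF
  )
}

# Example (hypothetical path and password):
# write_ssl_config client-ssl.properties /etc/kafka/client.truststore.jks changeit
```

Clusters that require mutual TLS additionally need the `ssl.keystore.*` settings, which this sketch leaves out.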

Best Practices for Kafka CLI

General Best Practices

Use scripts for repetitive tasks: Create shell scripts for common operations to ensure consistency.

Set default configurations: Use configuration files with the --command-config parameter to avoid typing the same options repeatedly.

Test in development first: Always test commands in a development environment before executing in production.

Document commands: Maintain documentation of frequently used commands and their parameters.

Production Environment Considerations

Limit direct access: Restrict access to production Kafka CLI tools to authorized administrators only.

Use read-only operations: Prefer read-only operations (like --describe and --list) when possible.

Double-check destructive commands: Carefully verify commands that modify or delete data before executing them.

Handle encoded messages carefully: When working with encoded messages, ensure consumers use the same schema as producers[3].

Performance Optimization

Batch operations: When possible, batch related operations to minimize connections to the Kafka cluster.

Be careful with --from-beginning: Avoid using this flag on large topics, because it replays every retained message and can put heavy load on the brokers and the network.

Use specific partitions: When debugging, specify partitions directly to limit the amount of data processed.

Monitor resource usage: Keep an eye on CPU and memory usage when running resource-intensive CLI commands.
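As a sketch of the partition advice above, the console consumer accepts `--partition`, `--offset`, and `--max-messages`, which together read a small, bounded slice of a topic instead of replaying it from the start. The function name `peek_partition` and the `CONSUMER` and `BOOTSTRAP` variables are assumptions for this example.

```shell
#!/bin/sh
# Sketch: read a bounded number of records from one partition.
# CONSUMER and BOOTSTRAP are assumed variables; override them as needed.
CONSUMER="${CONSUMER:-bin/kafka-console-consumer.sh}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

peek_partition() {
  # $1 = topic, $2 = partition number,
  # $3 = start offset: earliest, latest, or a number (default earliest),
  # $4 = maximum records to print (default 10)
  "$CONSUMER" --bootstrap-server "$BOOTSTRAP" --topic "$1" \
    --partition "$2" --offset "${3:-earliest}" \
    --max-messages "${4:-10}"
}

# Example (requires a running broker): five records of partition 1 from offset 42
# peek_partition my-topic 1 42 5
```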

Troubleshooting Common Issues

When working with Kafka CLI, you may encounter various issues. Here are some common problems and their solutions:

Broker Connectivity Issues

Problem: Unable to connect to Kafka brokers

Solutions:

Verify that broker addresses in --bootstrap-server are correct

Check network connectivity and firewall rules

Ensure the Kafka brokers are running

Verify that security configuration matches broker settings[5]
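The first two checks can be scripted. The sketch below splits a comma-separated bootstrap list into host and port pairs and probes each one over TCP. It assumes a netcat (`nc`) that supports the `-z` and `-w` options, which is not guaranteed on every system; the function names and the `PROBE` variable are assumptions for this example. A successful probe only shows that the port is open, not that the security settings match.

```shell
#!/bin/sh
# Sketch: check that every bootstrap server accepts TCP connections.
probe_tcp() {
  # $1 = host, $2 = port. Assumes nc supports -z (scan only) and -w (timeout).
  nc -z -w 3 "$1" "$2" >/dev/null 2>&1
}
# PROBE is an assumed hook so the probe command can be replaced.
PROBE="${PROBE:-probe_tcp}"

check_bootstrap() {
  # $1 = comma-separated host:port list, as passed to --bootstrap-server
  status=0
  for server in $(printf '%s' "$1" | tr ',' ' '); do
    host=${server%:*}
    port=${server##*:}
    if "$PROBE" "$host" "$port"; then
      echo "ok $server"
    else
      echo "unreachable $server"
      status=1
    fi
  done
  return "$status"
}

# Example:
# check_bootstrap "localhost:9092,localhost:9093"
```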

Topic Management Issues

Problem: Topic creation failing

Solutions:

Check if the Kafka cluster has sufficient resources

Verify that topic configuration is valid

Ensure you have necessary permissions

Check if a topic with the same name already exists[5]
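One frequent cause of an invalid topic configuration is the name itself. Kafka topic names may contain only ASCII letters, digits, `.`, `_`, and `-`, must be at most 249 characters long, and cannot be `.` or `..`. A minimal pre-check along those lines, with the function name `valid_topic_name` chosen for this example:

```shell
#!/bin/sh
# Sketch: validate a topic name before calling kafka-topics.sh --create.
valid_topic_name() {
  name="$1"
  # Reject empty names and the reserved names "." and "..".
  [ -n "$name" ] || return 1
  [ "$name" != "." ] && [ "$name" != ".." ] || return 1
  # Reject names longer than 249 characters.
  [ "${#name}" -le 249 ] || return 1
  # Reject any character outside letters, digits, ".", "_" and "-".
  case "$name" in
    *[!A-Za-z0-9._-]*) return 1 ;;
  esac
  return 0
}

# Example:
# valid_topic_name "my-topic" && echo "name is acceptable"
```

Kafka also warns when names mix `.` and `_`, because the two characters can collide in metric names; this sketch does not check for that.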

Consumer Group Issues

Problem: Consumer group not working properly

Solutions:

Use kafka-consumer-groups.sh to verify current status

Check consumer configurations

Verify permissions for the consumer group

Ensure the topic exists and has messages[6]

Conclusion

The Kafka CLI provides a powerful and efficient way to interact with Kafka clusters, offering essential functionality for developers and administrators. By understanding the available commands, following best practices, and knowing how to troubleshoot common issues, you can effectively leverage the CLI for various Kafka operations.

For simple tasks and administrative operations, the CLI remains the fastest and most direct approach. For more complex scenarios or when a graphical interface is preferred, alternative tools like Conduktor, Redpanda Console, or Confluent Control Center can complement the CLI experience.

Whether you're testing a new Kafka setup, troubleshooting issues, or automating operations, mastering the Kafka CLI is essential for anyone working with Kafka in development or production environments.

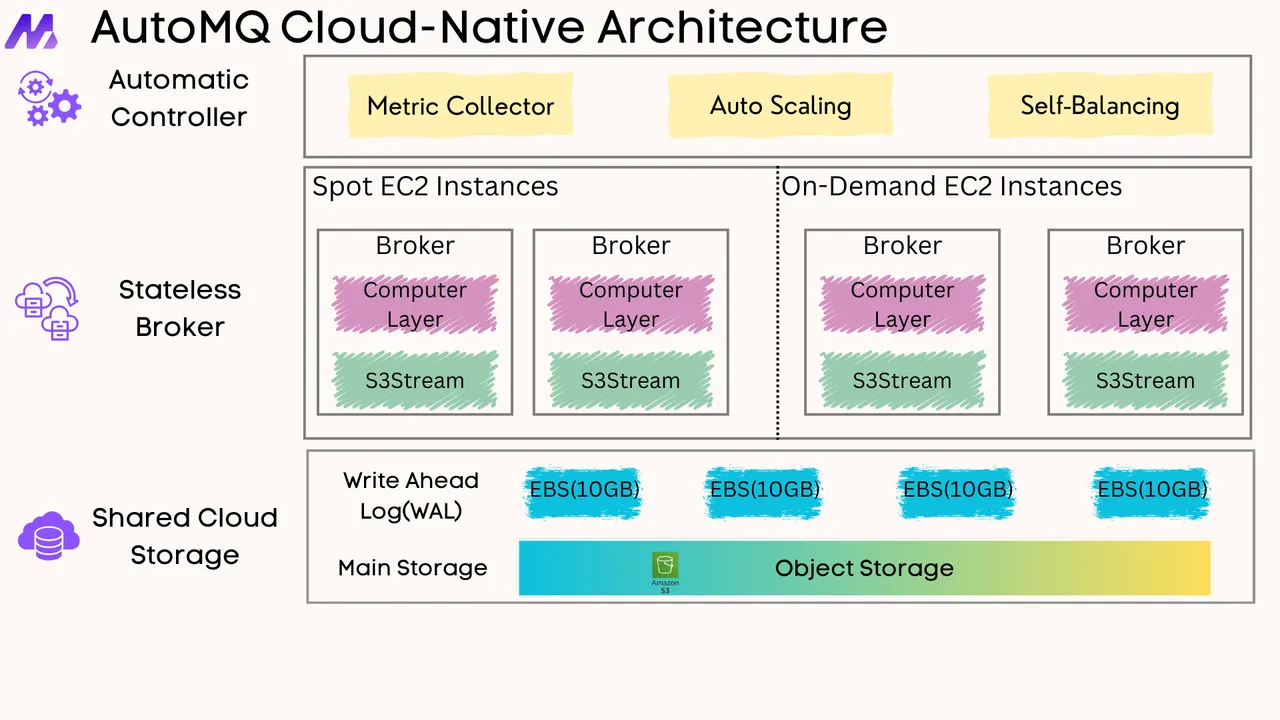

If you find this content helpful, you might also be interested in our product AutoMQ. AutoMQ is a cloud-native alternative to Kafka by decoupling durability to S3 and EBS. 10x Cost-Effective. No Cross-AZ Traffic Cost. Autoscale in seconds. Single-digit ms latency. AutoMQ now is source code available on github. Big Companies Worldwide are Using AutoMQ. Check the following case studies to learn more:

Grab: Driving Efficiency with AutoMQ in DataStreaming Platform

Palmpay Uses AutoMQ to Replace Kafka, Optimizing Costs by 50%+

How Asia’s Quora Zhihu uses AutoMQ to reduce Kafka cost and maintenance complexity

XPENG Motors Reduces Costs by 50%+ by Replacing Kafka with AutoMQ

Asia's GOAT, Poizon uses AutoMQ Kafka to build observability platform for massive data(30 GB/s)

AutoMQ Helps CaoCao Mobility Address Kafka Scalability During Holidays

JD.comx AutoMQ x CubeFS: A Cost-Effective Journey at Trillion-Scale Kafka Messaging

.png)